On Threads, Rotomonkey, a professional Environmental Technical Artist, has critiqued this new Sora generative AI video clip.

The critique is not so much about the realism of the clip as about the usability of the AI system within a production process of imagining images, creating options and editing possibilities: the mixture of art and management that underlies the making of movies, games and adverts.

This screen grab is from within the whole discussion. It is not about the creation of new imagery but about the editing and adjustment needed to get the right imagery. This is negotiation between different stakeholders about what is good or bad, what might work better, what is needed (or not) and so on. It is a mixture of verbal conversation, text notes, mood boards and rough sketches. AI is currently great at slinging together some new images but it is terrible at editing down one image to the specifications of many stakeholders.

What is wrong is a fundamental misunderstanding of what language is good at and why it is not a good user interface.

AI engineers are smooshing several things together to get the wrong answer:

- They recognise how important conversations between artists, designers and managers are as they try to create shared imagery and narratives.

- They understand how code and software are ways of creating pixel-perfect user interfaces and controlled user experiences.

However, that does not mean that text-prompted AI is the clear solution.

The barrier for this kind of generalised text-based system is language itself.

Let’s look at some criminals to help explain why.

Whodunnit?

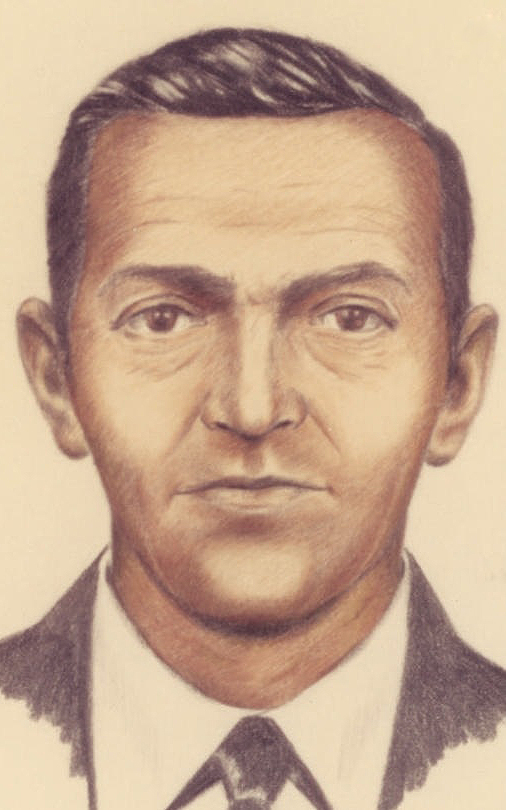

The image above is the FBI composite of D.B. Cooper. He successfully hijacked an aircraft and escaped with the ransom money in 1971 (maybe; the case remains unsolved).

Composite sketches, and the cruder but easier-to-deploy PhotoFit/Identikit systems, are used by law enforcement agencies to describe wanted suspects. They are simple and direct.

Meanwhile, police and newspapers still put out text descriptions of suspects. The problem is they are almost always completely useless. ‘Medium height man, dark hair, in dark clothing’ is pretty much all our local paper says about someone trying to break into a coffee shop one night. The language is precise yet vague.

Language is not good at describing what people look like. The number of words to describe just the nose is limited and their mutual understanding is even worse. I cannot tell you what my nose looks like. I could sketch it, show you something like it or tell you to think of a famous person I think has a similar nose.

Language is limited in specific ways and that affects its usability.

What is language good for?

Nick Enfield’s Language vs. Reality is a useful book on the issues of what language does well (or not).

If you cannot afford the book or just want a quicker introduction, there is a 30-minute interview on Word Of Mouth, which is how I first learnt about him.

The book’s subtitle provides the core point:

Why language is good for lawyers and bad for scientists

Language vs. Reality

Language is not a precise tool. It is not a direct code. It is not a solid architecture.

It is a social tool for negotiating and sharing meanings. It flexes around metaphors and analogies in different communities with diverse backgrounds and experiences. The words have dictionary definitions but are never definite. People choose to use and misuse words in order to find ways of sharing meanings and actions with other people.

- That is great for poets and authors as they find new ways to describe human experiences.

- That is great for lawyers as they explore novel ways to both argue for and against legal precedents.

- That is bad for scientists trying to describe exactly a particular thing in a specific context.

- That is bad for generative AI systems using text to edit imagery.

Language helps people align their cognitive and emotional systems in social contexts.

Organisations and governments try to impose exact meanings using authority and tone of voice, but that is no more natural language than computer code is. It is a specific structure to ensure direct communication in the most efficient way (as a fast-food menu tries to do and a fancy restaurant menu often tries not to).

Using language as the interface is not always efficient. The current problems with generative AI show how it is inefficient in both creation (many images but all with oddly wrong details) and editing (no way of coherently conversing about edits and adjustments). People are offering Prompt Guides but these are the fast-food menus of AI: simple, efficient but very, very limited.

Perhaps we end up with AI held tightly within the kind of structures we have in Excel: many menus and tools to do specific actions. Perhaps we end up with AI hosted in some virtual play space where everything can be loosely grasped and toyed with.

How we relate to AI in the long term will change. Text is the first step. Better visual interfaces will come. The current situation will change but only if engineers and organisations recognise what language is doing.